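- An open, lightweight language model (SLM) developed jointly by Google DeepMind and Google Research

- The name Gemma comes from the Latin word gemma, meaning "precious stone" (gem), and signals a model that is small yet high-performing

- Unlike large hosted LLM services such as ChatGPT or Claude, and unlike Mistral's larger models, Gemma is a small model that developers and researchers can run on a personal PC or a single GPU instance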

🔹 Key Overview

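| Item | Details |
|---|---|
| Release date | February 2024 |
| Developer | Google DeepMind + Google Research |
| Models | Gemma 2B, Gemma 7B (each in pretrained and instruction-tuned variants) |
| Model type | Decoder-only, Transformer-based LLM |
| Training data | The dataset itself is not public; presumed to be high-quality data similar to that used for the Gemini model family |
| License | Open weights (commercial use permitted, subject to the restrictions in the Gemma terms of use) |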

🔹 Key Features

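1. Lightweight and high-performing

- Available in two sizes: 2B (2 billion) and 7B (7 billion) parameters.

- Inference and fine-tuning can run on a single GPU (e.g., A100 or L4).

- In Google's reported benchmarks, Gemma 7B performs on par with or better than LLaMA 2 7B and Mistral 7B.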
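As a rough check on the single-GPU claim, the sketch below estimates weight memory from parameter count and numeric precision. It is only an approximation under stated assumptions: it uses the nominal 2B and 7B counts from the model names (the real counts differ somewhat), it covers weights only (no activations, KV cache, or optimizer state), and the helper name `weight_memory_gib` is hypothetical.

```python
# Bytes needed per parameter at common precisions.
# "int4" assumes 4-bit quantization, i.e. half a byte per weight.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# Nominal parameter counts taken from the model names (an assumption).
for name, params in [("Gemma 2B", 2e9), ("Gemma 7B", 7e9)]:
    for dtype in BYTES_PER_PARAM:
        print(f"{name} @ {dtype}: {weight_memory_gib(params, dtype):.1f} GiB")
```

Under these assumptions the 7B weights take roughly 13 GiB in bfloat16, which is why inference fits on one data-center GPU, while full fine-tuning needs considerably more memory than the weights alone.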

2. Optimized inference and fine-tuning

- Optimized for NVIDIA GPUs and Google Cloud TPUs.

- Usable from a range of frameworks, including Hugging Face Transformers, PyTorch, JAX, and TFLite.

- Supports parameter-efficient fine-tuning with LoRA and QLoRA.
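To make the efficiency of LoRA concrete: instead of updating a full weight matrix W of shape d_out × d_in, LoRA trains two small factors B (d_out × r) and A (r × d_in) and adds their product to the frozen W. The sketch below only counts parameters; the layer width of 3072 and rank of 8 are illustrative values chosen for the example, not figures from this document, and the function names are hypothetical.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in the LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative (assumed) sizes: one square projection layer and a small rank.
d_model, rank = 3072, 8

full = full_params(d_model, d_model)
lora = lora_trainable_params(d_model, d_model, rank)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={rank}):  {lora:,} trainable parameters ({100 * lora / full:.2f}% of full)")
```

QLoRA applies the same idea on top of a quantized, frozen base model, which reduces the memory taken by the base weights as well.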

3. Safety and responsible AI development

- Safety evaluation and filtering applied in line with Google's AI Principles.

- Ships with curated training data and guidelines for responsible use.

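🔹 Gemma Use Cases

- Building chatbots for personal projects

- Running an LLM on-device (e.g., mobile or embedded hardware)

- NLP fine-tuning experiments (e.g., sentiment analysis, text classification)

- Training models for AI education and research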
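For the chatbot use case, the instruction-tuned Gemma variants expect conversation turns wrapped in `<start_of_turn>` and `<end_of_turn>` markers. The following is a minimal sketch of building such a prompt by hand; the helper `build_gemma_prompt` is hypothetical, and in practice the tokenizer's own chat template is the safer route because it also handles special tokens such as `<bos>`.

```python
def build_gemma_prompt(turns):
    """Format (role, text) pairs into a Gemma-style chat prompt.

    `turns` is a list of (role, text) tuples where role is "user" or "model".
    The returned string ends with an open model turn so the model replies next.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    parts.append("<start_of_turn>model\n")  # cue the model to answer
    return "".join(parts)


history = [
    ("user", "Recommend a book about relativity."),
    ("model", "You could start with an introductory popular-science title."),
    ("user", "Something shorter, please."),
]
print(build_gemma_prompt(history))
```

Keeping the conversation as a plain list of turns makes it easy to append each new user message and model reply before rebuilding the prompt.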

🔹 Gemma vs. Other Lightweight LLMs

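| Model | Parameters | Performance (MT-Bench, etc.; qualitative) | License | Notes |
|---|---|---|---|---|
| Gemma 7B | 7B | At or above LLaMA 2 7B | Open weights (commercial use permitted with restrictions) | Backed by Google |
| Mistral 7B | 7B | Very strong | Apache 2.0 | Open, general-purpose |
| LLaMA 2 7B | 7B | Strong | Meta license (commercial use permitted) | Meta |
| Falcon 7B | 7B | Above average | Apache 2.0 | Developed in the UAE |
| Phi-2 (MS) | 2.7B | Very small; strong for its size | MIT License | Microsoft |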

🔹 Usage Example (Python)

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# Downloading these weights requires accepting the Gemma terms on Hugging Face.
tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b")
model = AutoModelForCausalLM.from_pretrained("google/gemma-7b")

prompt = "Explain the theory of relativity in simple terms."
inputs = tokenizer(prompt, return_tensors="pt")

# Generate up to 100 new tokens, then decode without special tokens such as <bos>.
outputs = model.generate(**inputs, max_new_tokens=100)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

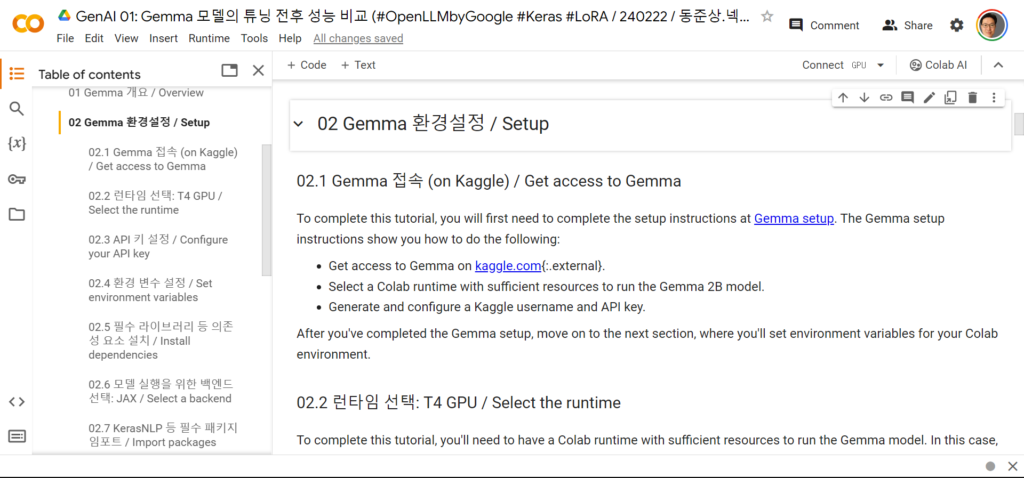

Colab Notebook | GenAI 01: Comparing the Tuning Performance of Gemma Models

#OpenLLMbyGoogle #Keras #LoRA / 240222 동준상.넥스트플랫폼 / ipynb

https://colab.research.google.com/drive/1pGBgSIf5EsbLLveStyaNIUIl8ea0KgcZ?usp=sharing

Prompt Engineering | Google Open Weights Gemma

Gemma: Open LLM by Google

- https://blog.google/technology/developers/gemma-open-models/

- https://ai.google.dev/gemma/?utm_source=keyword&utm_medium=referral&utm_campaign=gemma_cta&utm_content

🔹 Reference Links

- Official overview page: https://ai.google.dev/gemma

- Models available on GitHub and Hugging Face: Hugging Face – Gemma

동준상.넥스트플랫폼

End | Thank you.